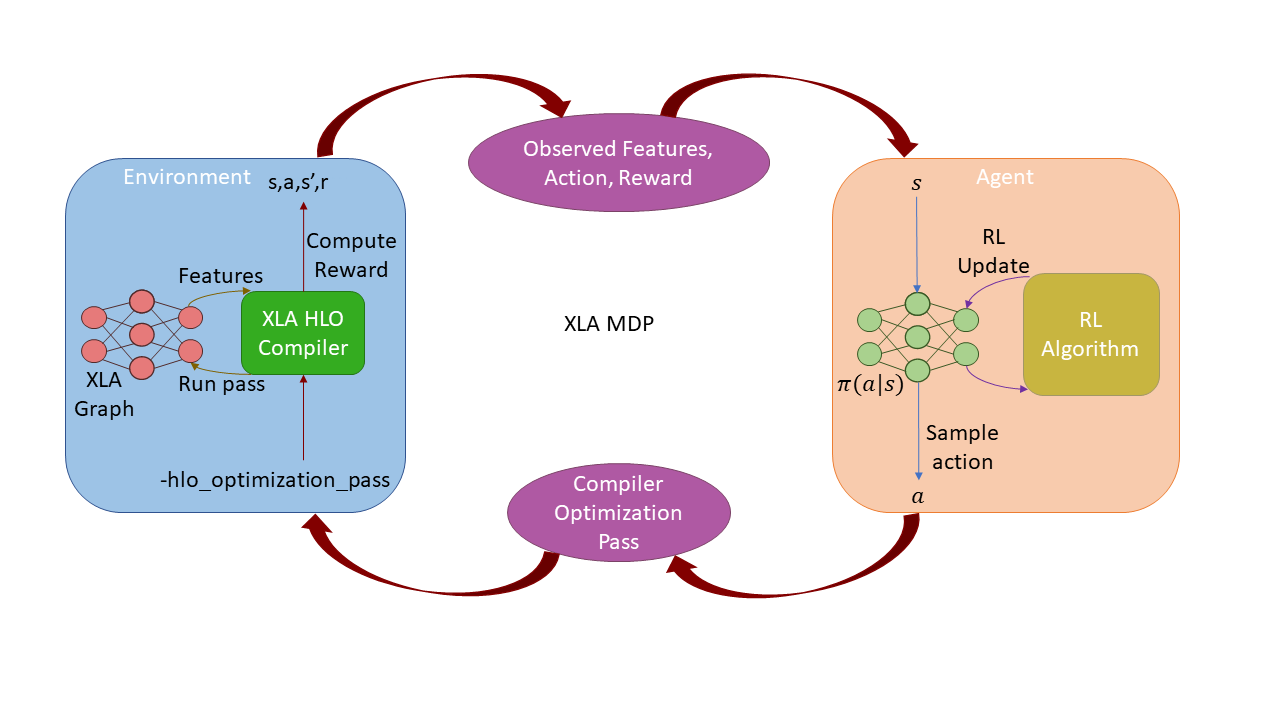

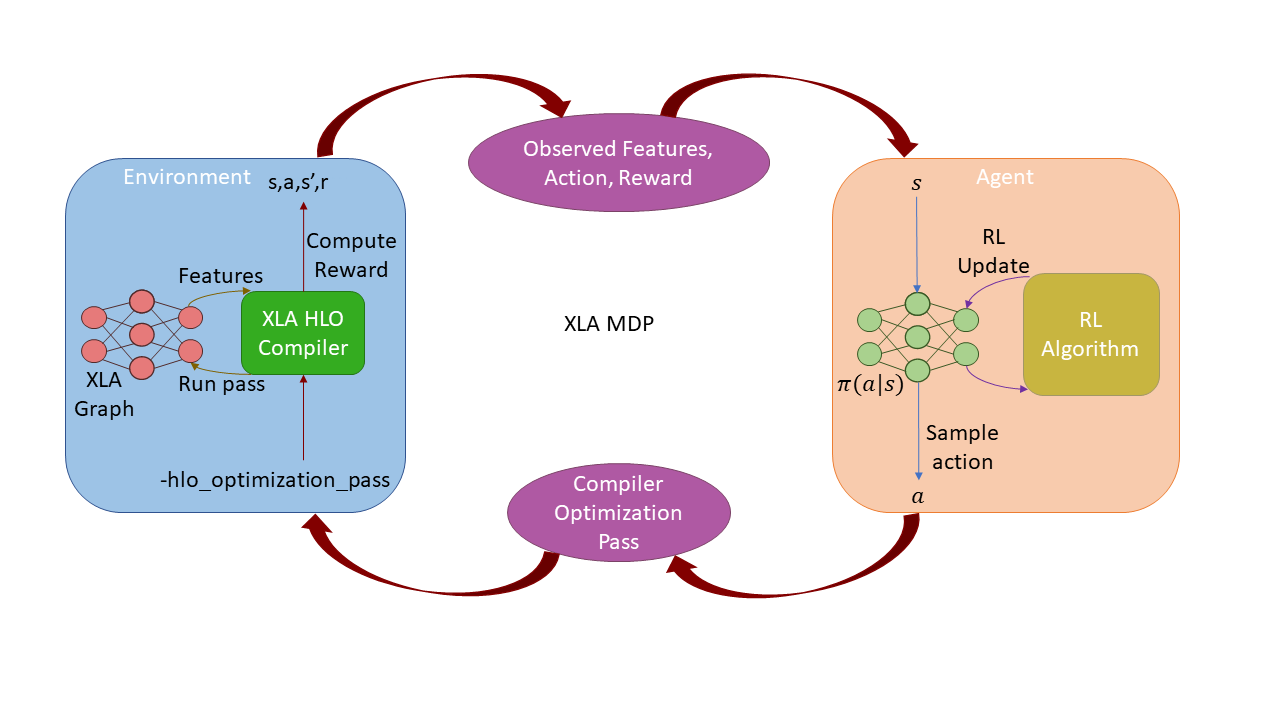

Overall algorithm:

```bibtex
@article{ganai2022tixorl,
  title     = {Target-independent XLA optimization using Reinforcement Learning},
  author    = {Ganai, Milan and Li, Haichen and Enns, Theodore and Wang, Yida and Huang, Randy},
  maintitle = {Neural Information Processing Systems 2022},
  booktitle = {Workshop on Machine Learning for Systems},
  year      = {2022}
}
```